Linear algebra: basics and applications

Introduction to linear algebra

Linear algebra is the branch of mathematics that deals with vectors, vector spaces and linear transformations. It underlies many mathematical and scientific disciplines and is used in physics, computer science, engineering and other fields. Its core activity is understanding and manipulating objects such as vectors and matrices in order to solve problems. Geometrically, a vector is a quantity with both a magnitude and a direction, while a matrix is a rectangular array of numbers arranged in rows and columns. Linear transformations are mappings between vector spaces that preserve vector addition and scalar multiplication. The basics of linear algebra include vector addition, scalar multiplication, linear independence of vectors and linear systems of equations. These form the foundation for more advanced concepts such as determinants, eigenvalues and eigenvectors, which are central to many applications.
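To make the basic operations concrete, here is a minimal sketch in plain Python of vector addition, scalar multiplication and applying a matrix to a vector. The function names `add`, `scale` and `apply` are chosen for illustration and do not come from any particular library. The last lines check the defining property of a linear transformation, T(c·u + v) = c·T(u) + T(v), for one example matrix.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def add(u: Vector, v: Vector) -> Vector:
    """Component-wise sum of two vectors of equal length."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return [a + b for a, b in zip(u, v)]


def scale(c: float, v: Vector) -> Vector:
    """Multiply every component of v by the scalar c."""
    return [c * a for a in v]


def apply(matrix: Matrix, v: Vector) -> Vector:
    """Apply the linear transformation given by `matrix` to v."""
    if any(len(row) != len(v) for row in matrix):
        raise ValueError("each matrix row must be as long as the vector")
    return [sum(a * x for a, x in zip(row, v)) for row in matrix]


# Example matrix (an assumption for illustration): rotation by 90 degrees
# counter-clockwise in the plane.
ROTATE_90 = [[0, -1],
             [1, 0]]

u, v, c = [1, 2], [3, 4], 5
left = apply(ROTATE_90, add(scale(c, u), v))             # T(c*u + v)
right = add(scale(c, apply(ROTATE_90, u)), apply(ROTATE_90, v))  # c*T(u) + T(v)
linearity_holds = left == right
```

Integer inputs are used so that the equality check is exact; with floating-point values a small tolerance would be needed instead.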

Linear systems of equations and vector spaces

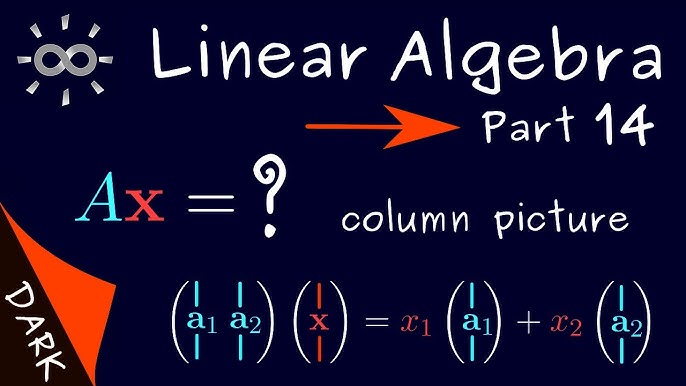

Linear systems of equations and vector spaces are central topics of linear algebra. A linear system is a set of linear equations in the same unknowns that must all hold at the same time. Such a system can be written compactly as Ax = b, where A is the matrix of coefficients, x the vector of unknowns and b the vector of right-hand sides. Solving these systems matters because they arise in many applications, from physics to economics. A vector space is a set of vectors, together with an addition and a multiplication by scalars, that satisfies a fixed list of axioms such as associativity and commutativity of addition and distributivity of scalar multiplication. Vector spaces formalize the linear properties of vectors: any two vectors can be added, any vector can be multiplied by a scalar, and the result always stays inside the space. This abstract view makes it possible to describe linear transformations and mappings precisely, and it is the setting in which advanced concepts such as eigenvalues and eigenvectors are defined.
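As an illustration of solving a system written in matrix form, with coefficient matrix A and right-hand side b, the following sketch uses Gauss-Jordan elimination. It is one of several possible methods, not necessarily the one a numerical library would use, and the name `solve_linear_system` is hypothetical. Exact `Fraction` arithmetic from the standard library is an assumption made here to avoid rounding issues in a small example.

```python
from fractions import Fraction
from typing import List, Sequence


def solve_linear_system(a: Sequence[Sequence[int]], b: Sequence[int]) -> List[Fraction]:
    """Solve A x = b for a square matrix A by Gauss-Jordan elimination."""
    n = len(a)
    if len(b) != n or any(len(row) != n for row in a):
        raise ValueError("A must be n x n and b must have length n")

    # Build the augmented matrix [A | b] with exact rational entries.
    m = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]

    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular; no unique solution")
        m[col], m[pivot] = m[pivot], m[col]

        # Scale the pivot row so the pivot entry becomes 1.
        pivot_value = m[col][col]
        m[col] = [x / pivot_value for x in m[col]]

        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]

    # The last column of the reduced matrix now holds the solution.
    return [row[n] for row in m]


# Example system:  2x + y = 5  and  x - y = 1
solution = solve_linear_system([[2, 1], [1, -1]], [5, 1])  # x = 2, y = 1
```

A system whose rows are linearly dependent, such as x + 2y = 1 and 2x + 4y = 2, has no unique solution, and this sketch raises a `ValueError` in that case.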

Matrices and determinants

Matrices and determinants are the main tools for representing and manipulating linear transformations and systems of equations. A matrix is a rectangular array of numbers that can represent a system of linear equations or a linear mapping. Matrices of the same shape can be added and subtracted, and two matrices can be multiplied when the number of columns of the first equals the number of rows of the second. This allows involved operations, such as applying several transformations in sequence, to be written compactly. The determinant is a single number computed from a square matrix. It is nonzero exactly when the columns of the matrix are linearly independent, and its absolute value is the factor by which the corresponding linear transformation scales volume. Determinants also enter the calculation of eigenvalues, which are used in applications such as physics and computer graphics. Matrices provide a systematic way to solve systems of linear equations and to describe geometric transformations, and the determinant indicates whether such a system has a unique solution.
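The rules for multiplying matrices and computing determinants can be sketched in a few lines of plain Python. The names `matmul` and `det` are illustrative. The determinant here uses cofactor expansion along the first row, which is easy to read but slow for large matrices; it is chosen for clarity, not as a recommended method.

```python
from typing import List

Matrix = List[List[int]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product; requires columns of a == rows of b."""
    if len(a[0]) != len(b):
        raise ValueError("columns of a must equal rows of b")
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def det(m: Matrix) -> int:
    """Determinant of a square matrix by cofactor expansion along row 0."""
    n = len(m)
    if any(len(row) != n for row in m):
        raise ValueError("determinant is only defined for square matrices")
    if n == 1:
        return m[0][0]
    total = 0
    for j in range(n):
        # Minor: drop row 0 and column j.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * det(minor)
    return total


stretch = [[2, 0], [0, 3]]    # scales x by 2 and y by 3, so areas scale by 6
other = [[1, 2], [3, 4]]
singular = [[1, 2], [2, 4]]   # second row is twice the first

area_factor = det(stretch)                     # 6
product_det = det(matmul(stretch, other))      # equals det(stretch) * det(other)
singular_det = det(singular)                   # 0: rows are linearly dependent
```

The example also illustrates that the determinant of a product equals the product of the determinants, and that a matrix with linearly dependent rows has determinant zero.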

Eigenvalues and eigenvectors

Eigenvalues and eigenvectors are key concepts in linear algebra and are widely used in science and engineering. An eigenvector of a linear mapping or a matrix A is a nonzero vector v that the mapping only stretches, compresses or reverses, so that it stays on the same line through the origin. The associated eigenvalue λ is the factor by which the eigenvector is scaled, which is expressed by the equation Av = λv. Eigenvalues and eigenvectors make it possible to reduce a linear transformation to a simpler form. They are used in solving linear differential equations, analyzing vibrations in mechanics and designing algorithms in computer science. They also play an important role in quantum mechanics, where they describe the possible states and measured values of a system.
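For a 2×2 matrix the eigenvalues can be computed directly, because they are the roots of the quadratic λ² − (trace)·λ + (determinant) = 0. The sketch below does this for real eigenvalues only and then checks candidate eigenvectors against the defining relation that the matrix maps the vector to a multiple of itself. The function names `eigenvalues_2x2` and `is_eigenpair` are hypothetical, and the example matrix is chosen purely for illustration.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def eigenvalues_2x2(m: Matrix) -> Tuple[float, float]:
    """Real eigenvalues of a 2x2 matrix, larger one first."""
    (a, b), (c, d) = m
    trace = a + d
    determinant = a * d - b * c
    discriminant = trace * trace - 4 * determinant
    if discriminant < 0:
        raise ValueError("eigenvalues are complex; this sketch handles real ones only")
    root = math.sqrt(discriminant)
    return ((trace + root) / 2, (trace - root) / 2)


def apply(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(a * x for a, x in zip(row, v)) for row in m]


def is_eigenpair(m: Matrix, v: Vector, lam: float, tol: float = 1e-9) -> bool:
    """Check that v is nonzero and that m applied to v equals lam * v."""
    if all(abs(x) <= tol for x in v):
        return False  # the zero vector is never an eigenvector
    return all(abs(y - lam * x) <= tol for y, x in zip(apply(m, v), v))


A = [[2, 1],
     [1, 2]]

largest, smallest = eigenvalues_2x2(A)   # 3.0 and 1.0
check_first = is_eigenpair(A, [1, 1], largest)     # A stretches (1, 1) by 3
check_second = is_eigenpair(A, [1, -1], smallest)  # A leaves (1, -1) unchanged
check_other = is_eigenpair(A, [1, 0], largest)     # (1, 0) is not an eigenvector
```

For larger matrices the eigenvalues are normally computed with iterative numerical methods from a linear algebra library instead of a closed-form formula.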