Mastering data engineering: Creating the foundation for success with data


What is Data Engineering?

Source: www.educative.io

Data engineering is the design, development, and management of the infrastructure, tools, and processes for collecting, storing, processing, and transforming data into a format that can be used for analysis, reporting, and decision-making. This includes tasks such as data ingestion, data integration, data transformation, data storage and data management.

In today's digital age, data engineering plays a critical role in harnessing the power of data to gain insights, make informed decisions, and achieve business results. Here are some reasons why data engineering is so important:

Data-driven decision making: Data engineering provides the foundation for collecting, storing, and processing large amounts of data that can be analyzed to provide valuable insights for data-driven decisions. This helps companies stay competitive, identify opportunities and overcome challenges in a rapidly changing business environment.

Scalability and Efficiency: Data engineering enables companies to process and manage large amounts of data efficiently. This is essential in today's data-driven world, where data volumes are growing rapidly and companies need to process and analyze data from various sources such as social media, IoT devices and other digital channels.

Data integration and consolidation: Data engineering enables companies to integrate and consolidate data from various sources, such as databases, data warehouses, APIs and external data sources. This creates a unified view of the data, ensuring the consistency, integrity and accuracy of the data, which is crucial for reliable data analysis and reporting.

Data quality and management: Data engineering includes data validation, data cleaning, and data enrichment processes to ensure data quality and integrity. This allows companies to ensure that the data used for analysis and decision making is accurate, reliable and trustworthy. Data governance practices such as data lineage, data cataloging, and data security are also critical to data engineering to ensure data compliance and protect sensitive data.

Advanced analytics and machine learning: Data engineering lays the foundation for advanced analytics and machine learning by providing the necessary data infrastructure and processing capabilities. This enables companies to derive insights from complex data sets, build predictive models, and implement machine learning algorithms for use cases such as personalized recommendations, fraud detection and customer segmentation.

Overall, data engineering plays a critical role in effectively managing and processing data, unlocking its value, and achieving competitive advantage in today's data-driven world.

What is the data engineering stack?

A typical data engineering stack consists of several key components, which can vary depending on the specific needs and technologies used by a company. However, common components of a data engineering stack include, but are not limited to:

Data Sources: These are the various data sources from which the data is fed into the data engineering pipeline. This can include structured data from relational databases, unstructured data from sources like social media, semi-structured data from APIs, and more.

Data Ingestion Tools: These are the tools or technologies used to collect and ingest data from various sources into the data engineering pipeline. This can include tools like Apache Kafka, Apache NiFi, Amazon Kinesis, or custom scripts for data ingestion.

Data storage: This component covers storing the ingested data in a suitable storage solution. Options include traditional relational databases such as MySQL and PostgreSQL, NoSQL databases such as MongoDB and Cassandra, big data storage such as the Hadoop Distributed File System (HDFS), and cloud-based object storage services such as Amazon S3, Google Cloud Storage or Microsoft Azure Blob Storage.

Data Processing and Transformation: This component involves processing and transforming the data into a format suitable for analytics, reporting, and other downstream use cases. This can include data validation, data cleaning, data enrichment, data aggregation, data integration and other data transformation tasks. Popular data processing and transformation tools include Apache Spark, Apache Flink and Apache Beam, while Apache Airflow is commonly used to schedule and orchestrate these jobs.

Data Integration: This component involves integrating data from different sources to create a unified view of the data. This can include data integration tools like Apache NiFi, Talend, or custom ETL (Extract, Transform, Load) scripts to move and integrate data from different sources.

Data Modeling and Database Design: This component involves designing and implementing the data model and database schema to store and manage data efficiently. This can include relational database management systems (RDBMS) such as MySQL, PostgreSQL or cloud-based managed databases such as Amazon RDS, Google Cloud SQL or Microsoft Azure SQL Database.

Data management and security: This component includes implementing data governance procedures and ensuring data security. This may include data lineage, data cataloging, data access controls, encryption, and other security measures to protect sensitive data and ensure compliance with data regulations.

Data monitoring and quality assurance: This component includes monitoring data pipelines, validating data quality, and ensuring data accuracy and integrity. This can include custom monitoring and quality assurance scripts, alongside supporting tools such as Apache Atlas (metadata and lineage) or Apache NiFi Registry (versioning of NiFi flows).

Data Visualization and Reporting: This component involves visualizing and reporting data for business stakeholders. Tools such as Tableau, Power BI or

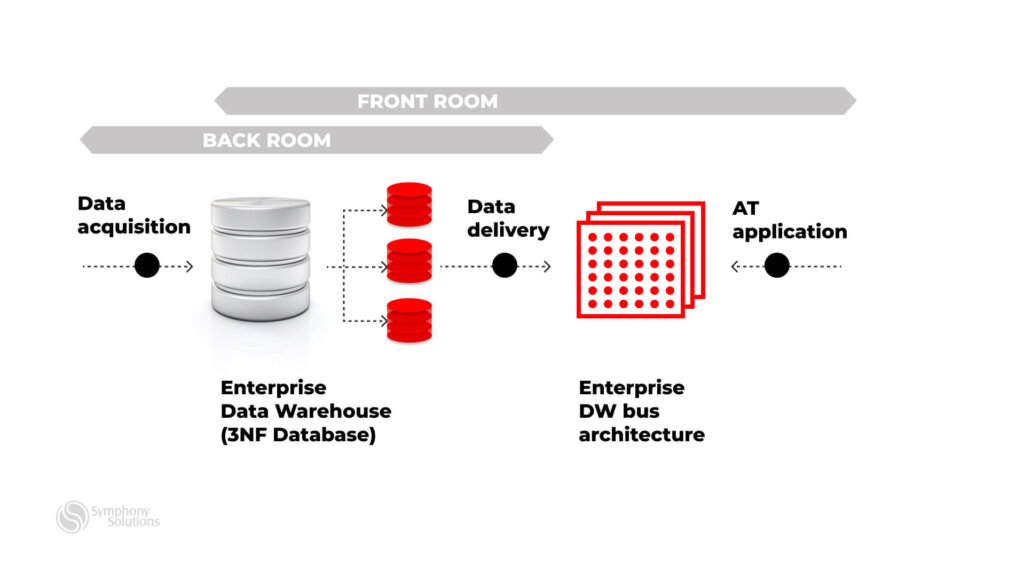

What are the best practices for developing and implementing scalable data pipelines?

Source: symphony-solutions.com

Developing and implementing scalable data pipelines requires careful planning and adherence to best practices to ensure efficient and reliable data processing. Below are some key best practices to consider:

Define Clear Goals: Clearly define the goals of your data pipeline, including data sources, data transformations, and data destinations. This guides the design and implementation process and ensures the pipeline meets the specific needs of your organization.

Choose appropriate data processing tools: Choose data processing tools and technologies that suit your specific use case. Consider factors such as data volume, data complexity, and real-time or batch processing requirements. Common tools for data processing and ingestion include Apache Spark, Apache Flink, Apache Kafka, Apache NiFi, and others.

Use distributed processing: Use distributed processing techniques to scale your data pipeline horizontally, enabling parallel processing of large amounts of data. Distributed processing frameworks such as Apache Spark and Apache Flink are designed to process large amounts of data and provide scalability and fault tolerance.

Optimize data storage: Choose appropriate data storage technologies that are efficient and scalable for your data pipeline. Consider factors such as data volume, data structure, and query performance. Common data storage technologies include relational databases, NoSQL databases, data lakes, and cloud-based storage services.

Implement data partitioning and minimize shuffling: Partition and distribute data across multiple processing nodes to optimize processing performance. Shuffling data across nodes should be kept to a minimum, as it adds network and serialization overhead. Consider techniques such as data partitioning, bucketing, and columnar file formats to optimize data storage and processing.
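To make the partitioning idea concrete, here is a minimal sketch in plain Python. It assumes records are dictionaries with a hypothetical `customer_id` field and uses a stable CRC32 hash so that the same key always lands in the same partition. Distributed engines have their own partitioners; this only illustrates the principle.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index using a stable hash (CRC32)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_records(records, key_field, num_partitions):
    """Group records so that all records sharing a key end up together."""
    partitions = defaultdict(list)
    for record in records:
        index = partition_for(str(record[key_field]), num_partitions)
        partitions[index].append(record)
    return dict(partitions)


# Hypothetical sample data for illustration only.
events = [
    {"customer_id": "c1", "amount": 10},
    {"customer_id": "c2", "amount": 5},
    {"customer_id": "c1", "amount": 7},
]
parts = partition_records(events, "customer_id", num_partitions=4)
```

Because every record for a given customer sits in one partition, a per-customer aggregation can run inside each partition without moving data between nodes.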

Monitor and optimize performance: Regularly monitor the performance of your data pipeline and optimize it for efficiency. Use monitoring tools and techniques to identify performance bottlenecks, data skews, or other issues and take appropriate action to optimize the pipeline.

Ensure data quality: Implement data validation and data quality checks at various stages of the data pipeline to ensure data is accurate, complete and consistent. Validate data against predefined rules, business logic, or reference data to identify and resolve data quality issues.

Implement error handling and fault tolerance: Design your data pipeline to handle errors and failures appropriately. Implement error handling mechanisms such as retries, logging, and alerts to detect and resolve errors. Consider implementing fault tolerance techniques such as replication, backup, and disaster recovery to ensure data durability and availability.
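As an illustration of the retry and logging mechanisms mentioned above, the following sketch wraps a pipeline step in a retry loop with exponential backoff. The function name `run_with_retries` and its parameters are assumptions made for this example, not part of any particular framework.

```python
import logging
import time

logger = logging.getLogger("pipeline")


def run_with_retries(task, max_attempts=3, base_delay=0.1, sleep=time.sleep):
    """Run task(); on failure, log it and retry with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, max_attempts, exc)
            if attempt == max_attempts:
                raise  # give up and surface the error to alerting
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

The `sleep` parameter is injectable so the backoff can be tested without waiting. In a real pipeline you would probably retry only on errors known to be transient.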

Plan for scalability and growth: Design your data pipeline to scale and adapt to changing data volumes and processing needs. Plan for future growth and consider factors such as …

How to ensure data quality and integrity in data engineering workflows?

Ensuring data quality and integrity is critical in data engineering workflows to ensure that the data processed is accurate, complete and consistent. Here are some best practices to ensure data quality and integrity in data engineering workflows:

Data Validation: Implement data validation checks at different stages of the data engineering workflow to validate the data against predefined rules, business logic, or reference data. This can include data type validation, range validation, format validation, and other business-specific validation checks to ensure that the data meets expected quality standards.
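A rough sketch of what type, range, and format validation can look like in plain Python follows. The record layout (`order_id`, `amount`, `email`, `order_date`) and the limits are hypothetical, and the email pattern is deliberately simplistic.

```python
import re
from datetime import datetime

# Deliberately simple pattern: something@something.something
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_order(record):
    """Return a list of validation errors; an empty list means the record passed."""
    errors = []
    # Type validation
    if not isinstance(record.get("order_id"), int):
        errors.append("order_id must be an integer")
    amount = record.get("amount")
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        errors.append("amount must be a number")
    # Range validation
    elif not 0 <= amount <= 1_000_000:
        errors.append("amount out of range")
    # Format validation
    email = record.get("email")
    if not isinstance(email, str) or not EMAIL_RE.match(email):
        errors.append("email has an invalid format")
    try:
        datetime.strptime(str(record.get("order_date")), "%Y-%m-%d")
    except ValueError:
        errors.append("order_date must be YYYY-MM-DD")
    return errors
```

Returning a list of errors rather than raising on the first problem makes it easier to report every issue in a rejected record at once.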

Data profiling: Perform data profiling to understand the characteristics of the data, such as data distributions, data patterns and data anomalies. Data profiling helps identify potential data quality issues, such as missing values, duplicate data, or inconsistent data, and allows for appropriate remedial action.
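A very small profiling pass might look like the sketch below. It assumes rows are dictionaries with hashable values and treats `None` and empty strings as missing; both are assumptions for this example.

```python
from collections import Counter


def profile(rows, columns):
    """Return per-column statistics and the number of fully duplicated rows."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    # A row is a duplicate if an identical row appeared earlier.
    row_counts = Counter(tuple(row.get(col) for col in columns) for row in rows)
    duplicate_rows = sum(count - 1 for count in row_counts.values())
    return report, duplicate_rows
```

Even this much is often enough to spot columns that are mostly empty or keys that are not actually unique.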

Data Cleansing: Apply data cleansing techniques to correct data quality issues. This can include techniques such as data deduplication, data standardization and data enrichment to ensure data is consistent and accurate.
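Standardization and deduplication can be sketched as follows. The field names `email` and `country` are hypothetical, and keeping the first occurrence of each key is just one possible deduplication policy.

```python
def standardize(record):
    """Normalize whitespace and letter case so equal values compare equal."""
    return {
        "email": record["email"].strip().lower(),
        "country": record["country"].strip().upper(),
    }


def deduplicate(records, key="email"):
    """Keep the first record seen for each key value."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique
```

Standardizing before deduplicating matters: `" Ann@Example.com "` and `"ann@example.com"` only count as duplicates once both have been normalized.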

Data transformation and enrichment: Implement data transformation and enrichment processes to ensure data is transformed and enriched as required for downstream processing. This may include data normalization, data aggregation, data enrichment with external data sources, and other data processing techniques to ensure data integrity and consistency.

Data lineage and documentation: Establish data lineage and documentation to track the origin, transformation, and flow of data throughout the data engineering workflow. This helps understand data flow, identify potential data quality issues, and ensure data integrity at every stage of the workflow.

Data Quality Monitoring: Implement data quality monitoring processes to continuously monitor the quality of data in the data engineering workflow. This can include automated data quality checks, data profiling, and data validation against predefined quality standards. Data quality monitoring helps identify and resolve data quality issues in real-time and ensures data remains accurate and consistent.

Data governance: Implement data governance practices to establish data standards, policies and procedures for data engineering workflows. This includes defining data quality rules, data ownership, data documentation and data lineage. Data governance helps ensure that data is processed according to established quality standards and compliance requirements.

Error Handling and Logging: Implement robust error handling and logging mechanisms to capture and log any data quality issues or processing errors that occur during the data engineering workflow. This helps in timely detection and resolution of data quality issues and ensures data integrity and consistency.

Test and Validate: Perform

What are the challenges and considerations when dealing with big data in data engineering projects?

Source: educba.com

Dealing with big data in data engineering projects can present unique challenges and considerations due to the sheer volume, velocity, and variety of data. Below are some challenges and considerations to keep in mind:

Scalability: Big data projects require scalable solutions that can efficiently process large amounts of data. This includes designing data pipelines that can scale horizontally, using distributed computing frameworks such as Apache Spark, Apache Flink or Apache Hadoop, and using cloud-based solutions that automatically adapt to data loads.

Performance: Processing and analyzing large amounts of data can be time-consuming, so performance becomes a crucial factor. Optimizing data engineering workflows for performance, for example by reducing data movement, optimizing data storage and retrieval, and using parallel processing techniques, is essential for timely and efficient processing of big data.

Data integration: Big data projects often involve integrating data from various sources, including structured and unstructured data, streaming and batch data, and data from multiple systems. Data integration challenges include data format compatibility, data ingestion, data transformation, and data consolidation from different sources, which requires careful consideration and planning.

Data storage and processing: Big data projects often require special considerations for data storage and processing. This may include using distributed file systems such as Hadoop Distributed File System (HDFS), wide-column databases such as Apache Cassandra, or cloud-based storage solutions such as Amazon S3 or Google Cloud Storage. Choosing the right data storage and processing technologies that meet project requirements is critical.

Data management: Managing data in big data projects can be very complex due to the volume and variety of data involved. Ensuring data privacy, data security and compliance with regulations such as GDPR or HIPAA is challenging. Implementing data governance policies, data masking techniques, encryption, and access controls to protect sensitive data is critical in big data projects.

Data quality: Maintaining data quality in big data projects can be challenging due to the sheer volume and variety of data. Ensuring data accuracy, completeness and consistency becomes increasingly difficult as data grows. Implementing robust data validation, data profiling, and data cleaning techniques is essential for ensuring data quality in big data projects.

Complexity of data processing: Big data projects often involve complex data processing tasks, such as real-time data processing, complex data transformations and machine learning algorithms. Tackling the complexity of data processing tasks, optimizing algorithms for large data sets, and handling complex data flows requires careful consideration and planning.

Cost Considerations: Big data projects can involve significant infrastructure costs, including storage, per

What are the common tools in data engineering?

Modern data engineering workflows rely on a variety of tools and technologies to efficiently process and manage large amounts of data. Here are some of the most popular:

Apache Spark: Apache Spark is a widely used, open-source, distributed data processing framework for big data analytics. It provides a unified data processing engine for batch processing, stream processing, machine learning and graph processing, making it a versatile tool for modern data engineering workflows.

Apache Kafka: Apache Kafka is a distributed streaming platform used for building real-time data streaming pipelines. It provides a distributed and fault-tolerant architecture for processing large amounts of data streams in real time, making it a popular tool for building modern data processing pipelines.

Apache Airflow: Apache Airflow is an open source platform for orchestrating complex data workflows. It provides a scalable and extensible framework for authoring, scheduling, and monitoring data pipelines, making it a popular solution for managing complex data processing workflows.

Apache Flink: Apache Flink is an open source stream processing framework that provides powerful event-time processing capabilities for real-time data streams. It supports both batch and stream processing as well as state management, making it very popular for modern data engineering workflows.

AWS Glue: AWS Glue is a fully managed extract, transform, and load (ETL) service provided by Amazon Web Services (AWS). It offers a serverless approach to building and managing data pipelines in the cloud, making it very popular for cloud-based data engineering workflows.

Google Cloud Dataflow: Google Cloud Dataflow is a managed stream and batch processing service provided by Google Cloud. It provides a unified programming model for processing data in batch and real-time, making it very popular for building data processing pipelines in the cloud.

Apache NiFi: Apache NiFi is an open source data flow management tool that provides a web-based interface for developing, managing, and monitoring data flows. It offers a visual and intuitive approach to building data pipelines, making it a popular tool for data processing workflows.

Apache Cassandra: Apache Cassandra is a highly scalable and distributed NoSQL database designed to handle large amounts of data across multiple nodes and clusters. It is popular for use cases that require high availability, fault tolerance, and low latency data access, making it suitable for modern data engineering workflows.

Apache Beam: Apache Beam is a unified open source programming model for batch and stream processing of data. It provides a high-level API for creating data processing pipelines that can run on various data processing engines such as Apache Spark, Apache Flink and Google Cloud Dataflow, making it a popular tool for creating portable data processing workflows.

SQL-based databases: SQL-based databases, such as MySQL, PostgreSQL and Microsoft SQL Server

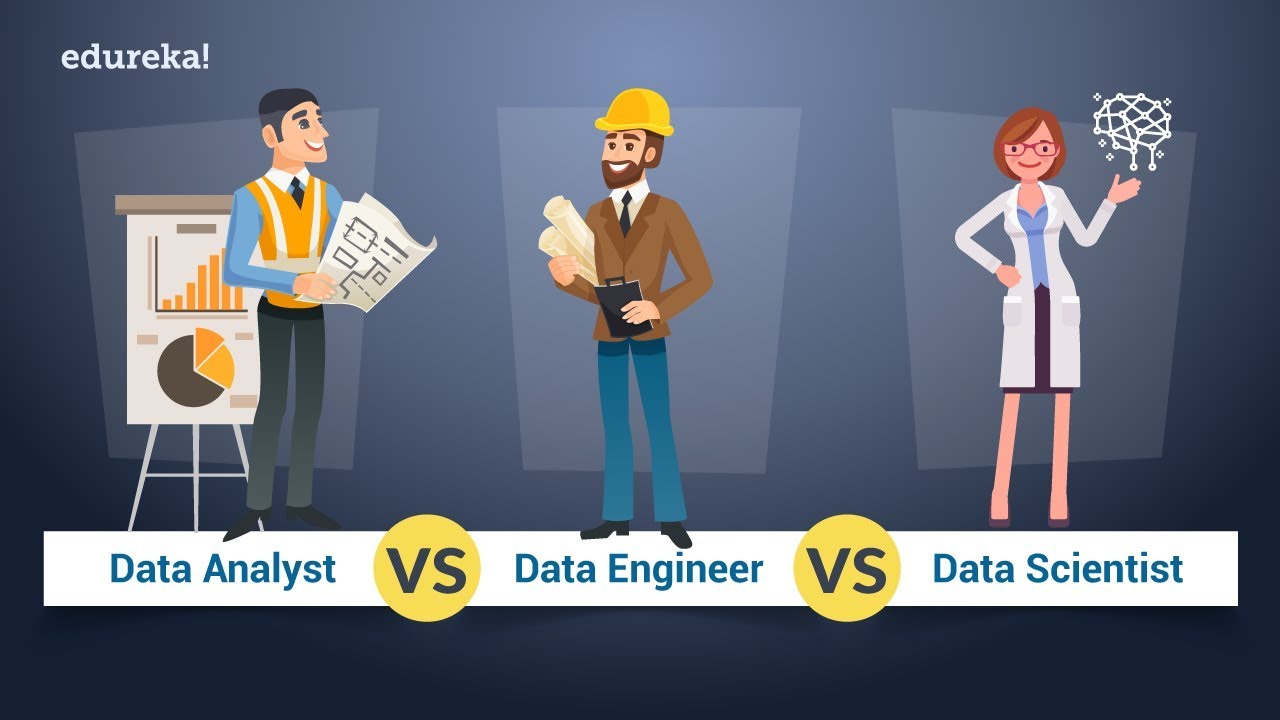

How is data engineering different from data science and data analytics?

Source: edureka.co

Data engineering, data science and data analytics are three different but interrelated areas in the field of data-driven decision making. Here are the main differences between them:

Data engineering: Data engineering involves the design, development and management of data pipelines, data architectures and data infrastructures to enable efficient and reliable data processing. Data engineers are responsible for building scalable and robust data pipelines that collect, store, transform, and make data available for further analysis. Data engineering focuses on the efficient handling, storage and processing of data to ensure its availability, quality and integrity for downstream data consumers.

Data Science: Data science involves the application of statistical, mathematical, and machine learning techniques to analyze data and derive insights, patterns, and trends. Data scientists use tools and techniques to explore and model data, build predictive models, and generate insights that support decision-making. Data science focuses on extracting knowledge and insights from data to support business goals, identify patterns and trends, and make data-driven decisions.

Data Analytics: Data analytics involves the process of examining, cleaning, transforming, and visualizing data to uncover insights and trends. Data analysts use various techniques and tools to examine, analyze, and interpret data to support decision making. Data analytics focuses on understanding data patterns, trends, and relationships to identify opportunities, optimize processes, and gain business insights.

In summary, data engineering focuses on the design and development of data pipelines and infrastructure, data science involves the application of statistical and machine learning techniques to data analysis, and data analytics involves the process of examining and visualizing data to generate insights. Although these areas are interconnected and often work together, they have different roles, responsibilities and skills. Data engineers provide the foundation for data scientists and data analysts to effectively analyze and derive insights from data. Data scientists and data analysts use the data provided by data engineers to perform data analysis and support business decisions.

What are the best practices for data modeling and database design in data engineering?

Data modeling and database design are important aspects of data engineering because they form the basis for storing, organizing, and querying data efficiently and accurately. Below are some best practices for data modeling and database design in data engineering:

Understand the data requirements: Start with a thorough understanding of the project’s data requirements. Work with data stakeholders, such as business analysts and data scientists, to gather requirements and define data entities, attributes, relationships and expectations for data quality. This ensures that the data model and database design align with business needs and goals.

Choose the right data model: Choose the appropriate data model that best fits the data requirements and use case. Common data models used in data engineering include relational, dimensional, hierarchical, and NoSQL models. Each model has its strengths and weaknesses, and choosing the right model is crucial for efficient data storage and retrieval.

Normalizing and denormalizing data: In relational data modeling, normalization is a technique for eliminating redundancies and inconsistencies in data. However, denormalization can be used strategically to improve performance in certain scenarios, such as read-intensive workloads. Strike a balance between normalization and denormalization based on data access patterns and performance requirements.

Plan for scalability and performance: Consider the scalability and performance aspects of the data model and database design. Optimize data structures such as indexes, partitions, and clustering keys to ensure efficient data retrieval and storage. Plan for horizontal scalability as needed to accommodate future data growth.

Ensuring data integrity and consistency: Implement data integrity and consistency checks, such as primary and foreign key constraints, unique constraints, and data validation rules, to ensure data accuracy and consistency. Enforce data validation and cleansing rules during data ingestion to prevent invalid or inconsistent data from entering the database.
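The constraints described above can be tried out with Python's built-in `sqlite3` module. The schema below (`customers`, `orders`) is a hypothetical example, and the `try_insert` helper is only there to show which inserts the database rejects.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled per connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    email       TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL CHECK (amount >= 0)
);
""")


def try_insert(sql, params):
    """Return True if the row was accepted, False if a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False


results = [
    try_insert("INSERT INTO customers VALUES (?, ?)", (1, "a@example.com")),  # accepted
    try_insert("INSERT INTO customers VALUES (?, ?)", (2, "a@example.com")),  # duplicate email
    try_insert("INSERT INTO orders VALUES (?, ?, ?)", (1, 99, 10.0)),         # unknown customer
    try_insert("INSERT INTO orders VALUES (?, ?, ?)", (2, 1, -1.0)),          # negative amount
    try_insert("INSERT INTO orders VALUES (?, ?, ?)", (3, 1, 10.0)),          # accepted
]
```

Letting the database reject bad rows is a useful last line of defense, but it works best together with validation earlier in the pipeline, where errors are cheaper to handle.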

Plan for data security: Incorporate data security best practices into database design, such as authentication, authorization, encryption and auditing, to protect sensitive data from unauthorized access and ensure data protection and compliance with data regulations.

Consider data partitioning and sharding: Plan data partitioning and sharding strategies to distribute data across multiple nodes or clusters to improve performance and scalability. Partition data based on logical or physical attributes, such as time, geography or customer, to optimize data retrieval and storage.
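One common way to partition by time and geography is to encode the partition in a directory-style path. The sketch below assumes records carry an ISO 8601 `event_time` and a `region` field; both names, and the `key=value` path layout, are choices made for this example.

```python
from datetime import datetime


def partition_path(record, base="events"):
    """Build a key=value style partition path from time and region."""
    ts = datetime.fromisoformat(record["event_time"])
    return f"{base}/year={ts.year:04d}/month={ts.month:02d}/region={record['region']}"


def group_by_partition(records):
    """Group records by the partition they would be written to."""
    groups = {}
    for record in records:
        groups.setdefault(partition_path(record), []).append(record)
    return groups
```

A query that filters on month and region then only needs to read the matching directories instead of scanning the whole data set.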

Plan for data backup and recovery: Implement robust data backup and recovery mechanisms to protect against data loss due to hardware failures, software errors, or other unforeseen events. Perform regular data backups and test data recovery processes to ensure data durability and availability.

Documenting and maintaining D

How do you handle data integration and data transformation tasks in a data engineering pipeline?

Source: en.wikipedia.org

Data integration and data transformation are critical steps in a data engineering pipeline because they involve extracting, cleaning, and transforming data from various sources into a format suitable for storage, analysis, and consumption. Here are some common approaches and best practices for handling data integration and data transformation tasks in a data engineering pipeline:

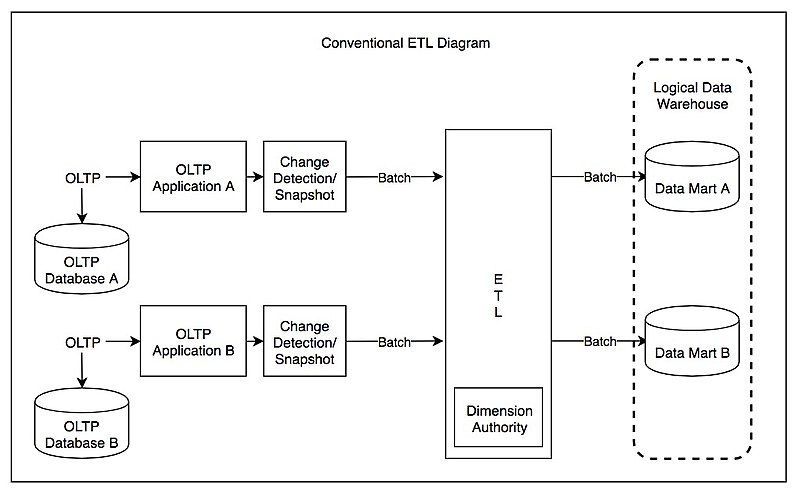

Extract, Transform, Load (ETL): ETL is a common approach to data integration and transformation. In this approach, data is extracted from different sources, converted into a common format or structure, and loaded into a target system, such as a data warehouse, a data lake or a database. ETL tools and frameworks such as Apache NiFi, Apache Airflow or commercial solutions such as Informatica PowerCenter or Microsoft SQL Server Integration Services (SSIS) are commonly used to automate the ETL process.
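The three ETL steps can be sketched end to end with the Python standard library. The CSV content, the `orders` table, and the choice to store amounts as integer cents are all assumptions made for this example; an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical source data with inconsistent spacing and letter case.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.90
2,Bob,5.00
3,alice,0.10
"""


def extract(text):
    """Extract: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: fix types, normalize names, convert amounts to cents."""
    return [
        (int(r["order_id"]), r["customer"].strip().lower(), round(float(r["amount"]) * 100))
        for r in rows
    ]


def load(conn, rows):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    with conn:
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
totals = dict(conn.execute("SELECT customer, SUM(amount_cents) FROM orders GROUP BY customer"))
```

Keeping the three steps as separate functions makes each one easy to test on its own and to replace when the source or target changes.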

Data Wrangling: Data wrangling is the process of cleaning, structuring, and transforming data to make it suitable for analysis. Data wrangling tasks typically include data cleaning, data validation, data enrichment, and data aggregation. Data wrangling can be performed using dedicated data wrangling tools such as Trifacta or OpenRefine, or using data visualization tools with data preparation capabilities such as Tableau or Power BI.

Data Streaming: Data streaming is an alternative approach to data integration and transformation where the data is processed in real-time or near-real-time as it is fed into the system. Data streaming frameworks such as Apache Kafka, Apache Flink or Apache Spark Streaming enable processing of data in real time and enable low latency data processing and analysis.
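To illustrate processing data as it arrives, here is a small sketch of a tumbling-window count written as a Python generator. It assumes events are `(timestamp_seconds, key)` pairs that arrive in timestamp order, which real streams do not guarantee; stream processing frameworks add mechanisms for late and out-of-order events that this sketch leaves out.

```python
def tumbling_counts(events, window_seconds):
    """Yield (window_start, counts) each time a fixed-size window closes."""
    current_start = None
    counts = {}
    for timestamp, key in events:
        start = timestamp - timestamp % window_seconds
        if current_start is not None and start != current_start:
            yield current_start, counts  # the previous window is complete
            counts = {}
        current_start = start
        counts[key] = counts.get(key, 0) + 1
    if current_start is not None:
        yield current_start, counts  # flush the last open window
```

Because the function is a generator, results for a window are available as soon as the first event of a later window arrives, rather than after the whole input has been read.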

Data orchestration: Data orchestration involves the coordination and management of data integration and transformation tasks across various systems and processes. Data orchestration tools like Apache Airflow provide capabilities for defining, scheduling, and monitoring data workflows, including data extraction, transformation, and loading tasks. This enables efficient management and coordination of complex data engineering pipelines.
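At its core, an orchestrator runs tasks in an order that respects their dependencies. The sketch below shows that idea with `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+). It is not Airflow code; `run_pipeline` and the task names are hypothetical, and scheduling, retries, and monitoring are left out.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after the tasks it depends on; return order and results."""
    order = list(TopologicalSorter(dependencies).static_order())
    results = {}
    for name in order:
        # Each task receives the results of everything that ran before it.
        results[name] = tasks[name](results)
    return order, results


tasks = {
    "extract": lambda done: [1, 2, 3],
    "transform": lambda done: [x * 2 for x in done["extract"]],
    "load": lambda done: len(done["transform"]),
}
# Maps each task to the set of tasks that must finish first.
dependencies = {"transform": {"extract"}, "load": {"transform"}}
order, results = run_pipeline(tasks, dependencies)
```

`TopologicalSorter` raises `graphlib.CycleError` if the dependencies contain a cycle, which catches a common pipeline definition mistake before anything runs.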

Data quality and validation: Ensuring data quality and validation is an important aspect of data integration and transformation. Implement data validation checks, such as data type validation, range validation, uniqueness validation and consistency validation, to ensure that the data is correct, complete and consistent. Data quality tools such as DataWrangler, Great Expectations or Apache NiFi can be used to automate data validation and data quality checks.

Data Mapping and Transformation Rules: Define clear data mapping and transformation rules to ensure consistent data transformation across different sources and formats. Data mapping defines the relationships between data elements in different data sources, and data transformation rules determine how the data should be transformed from the source to the target format. Document and maintain data mapping

What are the most common mistakes to avoid in data engineering projects to ensure successful data processing and analysis?

Data engineering projects can be complex and challenging, and it is important to be aware of the common pitfalls and mistakes to avoid for successful data processing and analysis. Here are some common pitfalls and mistakes to avoid in data engineering projects:

Poor data quality: Poor data quality can lead to inaccurate, inconsistent, and unreliable results. Validating and cleaning data at every stage of the data engineering pipeline, including data ingestion, data transformation, and data storage, is critical. Implement data validation checks, data cleaning techniques, and data enrichment processes to ensure data quality.

Lack of data governance: Data governance refers to the management and monitoring of data assets, including data integrity, data security, data protection and data compliance. It is essential for data engineering projects to adopt appropriate data governance practices to ensure that data is managed effectively and compliantly. Develop data governance policies, implement data security measures, and comply with data protection regulations to avoid data-related issues.

Insufficient scalability and performance: Data engineering projects often process large amounts of data, and scalability and performance are critical factors that need to be considered. Insufficient scalability and performance can lead to slow processing times, increased resource utilization, and inefficient data processing. Optimize data processing algorithms, leverage parallel processing, and plan for horizontal scaling to ensure efficient data processing.

Lack of documentation and metadata management: Proper documentation and metadata management are essential to understanding and maintaining data engineering pipelines. Failure to document data sources, data transformation rules, data mapping, and other relevant information can lead to confusion, errors, and difficulty in troubleshooting and maintaining data engineering workflows. Thoroughly document data engineering processes, maintain metadata, and establish appropriate documentation procedures.

Inadequate error handling and monitoring: Errors can occur at any point in data engineering processes, for example during data ingestion, data conversion or data storage. It is crucial to implement robust error handling mechanisms, such as logging, alerts, and exception handling, to catch and resolve errors in a timely manner. Monitoring data engineering pipelines and proactively resolving issues can prevent data-related problems and ensure smooth data processing.

Lack of collaboration and communication: Data engineering projects often involve different teams working together, such as data engineers, data scientists and business representatives. A lack of effective collaboration and communication can lead to misunderstandings, misinterpretations, and misaligned expectations, which in turn lead to data-related issues. Encourage collaboration between diverse teams, establish clear communication channels, and align expectations to ensure success.